At 3spin we’ve developed many products around 360° videos, mainly for VR applications. One common problem when working with 360° videos in VR is sound and its perceived position in space. Background sound, like music, is not much of a challenge, because it does not depend on the user’s orientation in space. Voices and other sounds with a fixed position, however, are. When a user watches a video with stereo sound in a simple 360° player and looks to the right, the sound does not change. But it should change to reflect the user’s new orientation. Otherwise, sounds from objects that are now in front of the user will be perceived as coming from the right. That effect can be highly disorienting. Sound can also be a great tool for storytelling and for directing the user’s view, if implemented correctly.

There are quite a few solutions and workarounds for this problem. We looked for a simple solution that would also work for our existing videos with plain stereo (left/right) sound.

Ambisonic sound

The cleanest solution would be to work with recorded surround sound. Ambisonic sound can deliver really great results, and some video players, and even Facebook and YouTube, support ambisonic sound embedded in video files. For this project, however, we had to support all our existing videos with stereo sound, which we simply cannot re-record.

Audio spatializer plugins and Unity 3D sound

There are many solutions for getting really realistic 3D sound out of Unity. Microsoft, Google and Oculus have all implemented their own spatializer plugins – all with room effects and other cool features. Using these plugins with our existing videos requires us to place virtual speakers to the left and right inside our Unity scene. This way the right speaker can play back the right stereo channel, which then has a fixed place in space. If the user turns around to look back, the spatializer plugin (or the simple 3D sound provided by Unity) transforms the right-channel sound so that it is perceived as “coming from the left”.
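To illustrate why a virtual speaker with a fixed position flips sides when the user turns around, here is a deliberately simplified sketch. It assumes a plain constant-power stereo panner, not the HRTF-based processing real spatializer plugins perform; the function names, the azimuth convention (degrees, clockwise from the video's original forward direction) and the sine panning law are illustrative choices, not Unity's API.

```python
import math

def relative_azimuth(speaker_azimuth_deg, head_yaw_deg):
    """Speaker direction relative to the head, in degrees (0 = straight ahead, 90 = right)."""
    return (speaker_azimuth_deg - head_yaw_deg) % 360.0

def speaker_gains(speaker_azimuth_deg, head_yaw_deg):
    """Return (left_gain, right_gain) for one virtual speaker.

    Simplified model (an assumption, not what spatializer plugins do):
    pan = sin(relative azimuth), mapped to constant-power gains. It ignores
    timing and frequency cues, so a source in front and one behind sound the same.
    """
    pan = math.sin(math.radians(relative_azimuth(speaker_azimuth_deg, head_yaw_deg)))
    angle = (pan + 1.0) * math.pi / 4.0  # maps pan -1..1 to 0..pi/2
    return math.cos(angle), math.sin(angle)

# Hypothetical placement: the "right" virtual speaker sits 90 degrees to the
# right of the video's original forward direction.
RIGHT_SPEAKER_AZIMUTH = 90.0

print(speaker_gains(RIGHT_SPEAKER_AZIMUTH, 0.0))    # facing forward: gains close to (0, 1)
print(speaker_gains(RIGHT_SPEAKER_AZIMUTH, 180.0))  # turned around: gains close to (1, 0)
```

The front/back ambiguity of this toy model is exactly what the timing and frequency cues of real spatializers resolve.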

I liked this idea because it requires little coding and makes use of existing, very specialized technology instead of reinventing the wheel.

Unity does not provide an easy way to separate the left and right channels of an audio source and route them to different audio sources. However, this separation is required to place the channels in 3D space. So we tried different approaches to get things to work:

- We tried adding two audio tracks to our video: one containing only the left channel (right one muted) and one the other way around. The new Unity 5.6 video player should be able to connect every audio track of a video to a separate audio source. This approach failed because we got errors when importing the video. I don’t know if this is a Unity bug or an encoding error on our side, but the idea is very promising and should be investigated further.

- We thought about separating the audio from the video. This way we could use one mono audio track for each channel and a video without sound. But in previous projects we’ve found that synchronized playback is by no means a trivial problem.

- We looked into the Native Audio Plugin SDK, which can almost certainly be used to solve this problem. But we did not want the heavy overhead of native development for multiple platforms.
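Once the raw samples are accessible (which is exactly what the video pipeline does not easily offer), separating the channels is conceptually simple: Unity's sample buffers are interleaved, so a stereo buffer is laid out as [L0, R0, L1, R1, ...]. The sketch below, with hypothetical helper names, shows both variants discussed above: splitting into two mono buffers, and building the two "one channel muted" stereo tracks from the first approach.

```python
def split_stereo(interleaved):
    """Split an interleaved stereo buffer [L0, R0, L1, R1, ...] into two mono buffers."""
    return interleaved[0::2], interleaved[1::2]

def muted_copies(interleaved):
    """Build two stereo buffers: left channel only (right muted) and right only (left muted)."""
    left_only = [s if i % 2 == 0 else 0.0 for i, s in enumerate(interleaved)]
    right_only = [s if i % 2 == 1 else 0.0 for i, s in enumerate(interleaved)]
    return left_only, right_only

samples = [0.1, -0.1, 0.2, -0.2, 0.3, -0.3]  # three stereo frames
left, right = split_stereo(samples)
left_track, right_track = muted_copies(samples)
```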

Audio filters

Unity provides the OnAudioFilterRead(float[], int) method, which can be implemented in any MonoBehaviour. The float array contains audio data from either an audio source or an audio listener, and it can be modified in place. This is something we can work with. We attached a simple MonoBehaviour script implementing this method to test it with our videos. A quick way to check that the filter is active is to add some random noise to the samples. When we attached our test script to an audio source with a stereo test sound (to break down the problem, we left the video out at this point), everything worked fine. See the excellent article by Martin Climatiano to learn how audio filters work in Unity.
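In Unity the callback is written in C# and receives the sample buffer plus the channel count; the samples are interleaved frame by frame and, per Unity's documentation, nominally in the range [-1, 1]. The sketch below is a Python stand-in for such a noise test filter that mirrors OnAudioFilterRead(float[] data, int channels). The function name on_audio_filter_read, the noise amplitude and the seeded generator are illustrative assumptions.

```python
import random

NOISE_AMPLITUDE = 0.05    # hypothetical test value: clearly audible, not painful
_rng = random.Random(42)  # seeded so test runs are reproducible

def on_audio_filter_read(data, channels):
    """Add random noise to an interleaved buffer in place, like the C# callback would."""
    frame_count = len(data) // channels
    for frame in range(frame_count):
        for ch in range(channels):
            i = frame * channels + ch  # interleaved index of this channel's sample
            noisy = data[i] + _rng.uniform(-NOISE_AMPLITUDE, NOISE_AMPLITUDE)
            data[i] = max(-1.0, min(1.0, noisy))  # keep samples in the nominal [-1, 1] range

buffer = [0.0] * 8  # four stereo frames of silence
on_audio_filter_read(buffer, 2)
```

If the noise is audible when the script is attached, the filter is in the audio chain.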

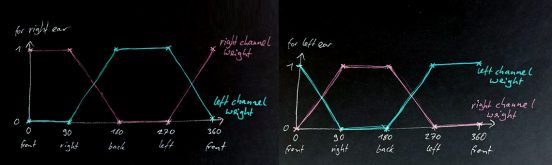

Next, we implemented a very, very basic spatializer for stereo sounds. Normally, when you want to place sound in 3D space (and do it “right”), you have to use psychoacoustic cues, like the time difference between the sound reaching the left and the right ear, and frequency changes for sounds behind versus in front of the user. We decided to go with the most naive implementation possible and test its results: we took the user’s current rotation around the y-axis to see whether she is looking forward, right, backward or somewhere else. Based on this angle, we weighted the audio data of the right and left channels for each ear. When the user is looking forward, the right ear hears only the right channel and the left ear hears only the left channel. When she is looking backward, the channels are swapped: the right ear hears only the left channel and the left ear hears only the right channel. When the user is looking straight to the right, both ears hear only the right channel. We interpolated the weights for the angles in between, based on some diagrams we sketched for visualization.
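A minimal sketch of this weighting, assuming a yaw angle in degrees (0° = the video's original forward direction, increasing to the right, as with Unity's rotation around the y-axis) and linear interpolation between the four directions. The behavior when looking left (both ears hear only the left channel) is assumed by symmetry; the names and keyframe tables are hypothetical.

```python
from bisect import bisect_right

# Hypothetical keyframes: yaw in degrees -> weight of the RIGHT source channel
# for each ear (the left channel gets 1 - weight). 0 = forward, 90 = right,
# 180 = backward, 270 = left (assumed by symmetry).
ANGLES = [0.0, 90.0, 180.0, 270.0, 360.0]
LEFT_EAR_RIGHT_WEIGHT = [0.0, 1.0, 1.0, 0.0, 0.0]
RIGHT_EAR_RIGHT_WEIGHT = [1.0, 1.0, 0.0, 0.0, 1.0]

def interpolate(xs, ys, x):
    """Piecewise-linear interpolation over sorted keyframes xs -> ys."""
    i = min(max(bisect_right(xs, x) - 1, 0), len(xs) - 2)
    t = (x - xs[i]) / (xs[i + 1] - xs[i])
    return ys[i] + t * (ys[i + 1] - ys[i])

def spatialize(data, yaw_deg):
    """Remix an interleaved stereo buffer [L0, R0, L1, R1, ...] in place."""
    yaw = yaw_deg % 360.0
    w_left = interpolate(ANGLES, LEFT_EAR_RIGHT_WEIGHT, yaw)
    w_right = interpolate(ANGLES, RIGHT_EAR_RIGHT_WEIGHT, yaw)
    for i in range(0, len(data) - 1, 2):
        left, right = data[i], data[i + 1]
        data[i] = w_left * right + (1.0 - w_left) * left        # left ear
        data[i + 1] = w_right * right + (1.0 - w_right) * left  # right ear
```

For example, calling spatialize(buf, 180.0) on buf = [0.2, 0.8] swaps the two channels, while a yaw of 90.0 sends the right channel to both ears.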

Again, this is a fairly naive implementation, but it works quite well and gives some important clues about where the action is taking place. The filter also requires little computational power, which matters for mobile VR applications.

We fine-tuned this filter further by using Unity’s AnimationCurves to get a smoother interpolation and to be able to quickly change the channel weights for different directions. We found that muting a channel completely is not necessary when looking to the left or to the right; being able to hear what’s going on behind you is a nice thing.
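Unity's AnimationCurve interpolates between keyframes using tangents; the sketch below only approximates that idea with smoothstep easing between keyframes placed every 90°, so it is not equivalent to AnimationCurve.Evaluate. The keyframe values are hypothetical: at the side directions each ear keeps 20% of the opposite channel instead of muting it, so sound from behind the user stays audible.

```python
# Hypothetical keyframes every 90 degrees (0 = forward, 90 = right,
# 180 = backward, 270 = left): weight of the RIGHT source channel per ear.
# At the side directions each ear keeps 20% of the opposite channel.
STEP = 90.0
LEFT_EAR_RIGHT_WEIGHT = [0.0, 0.8, 1.0, 0.2, 0.0]
RIGHT_EAR_RIGHT_WEIGHT = [1.0, 0.8, 0.0, 0.2, 1.0]

def smoothstep(t):
    """Ease-in/ease-out curve on [0, 1] with zero slope at both ends."""
    return t * t * (3.0 - 2.0 * t)

def eased_weight(keys, yaw_deg):
    """Smoothly interpolate between the two keyframes surrounding yaw_deg."""
    yaw = yaw_deg % 360.0
    i = min(int(yaw // STEP), len(keys) - 2)
    t = (yaw - i * STEP) / STEP
    return keys[i] + smoothstep(t) * (keys[i + 1] - keys[i])

# Looking right: both ears mostly hear the right channel, but the left
# channel (now behind the user) stays audible.
print(eased_weight(LEFT_EAR_RIGHT_WEIGHT, 90.0), eased_weight(RIGHT_EAR_RIGHT_WEIGHT, 90.0))
```

Because smoothstep is monotonic on [0, 1], the eased weights never overshoot the keyframe values, which keeps every weight within [0, 1].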

Audio filters and video plugins

Audio filters can be used on any audio source, as long as it is connected to an audio clip. This is a problem, because Unity’s new video player can pipe its audio to an audio source, but there is no audio clip involved. Therefore we had to attach our filter to the audio listener, where it processes the mixed output of every audio source in the scene. For our projects this is usually not much of a problem, because aside from the video’s audio we do not use much sound. But for other projects, games, etc., this could become a severe limitation.

For our oldest projects, we use the EasyMovieTexture plugin to play back videos. It too can pipe its audio to an audio clip, which works fine inside the editor. On Android devices, however, it produces system sound that cannot be manipulated by Unity. Therefore our solution does not work with this plugin (at least on Android).

With AVPro Video, the Unity sound pipeline can be used on all Windows systems (nice for Oculus Rift / HTC Vive applications), but again not on Android.

Next steps

Over the next few months, we will wait for the Unity video player to stabilize and continue fine-tuning our audio filter once we can use the player in production projects. Until then, I would love to hear about any plugins, scripts or other approaches that could solve this small but very specific problem!